This post explores building a powerful VMware lab from a budget-friendly 24-core workstation, a Synology NAS with SSD storage, and an advanced networking setup, including a shift from MikroTik to Fortinet gateways. A hands-on guide to cost-effective, enterprise-ready cloud migration and virtualization.

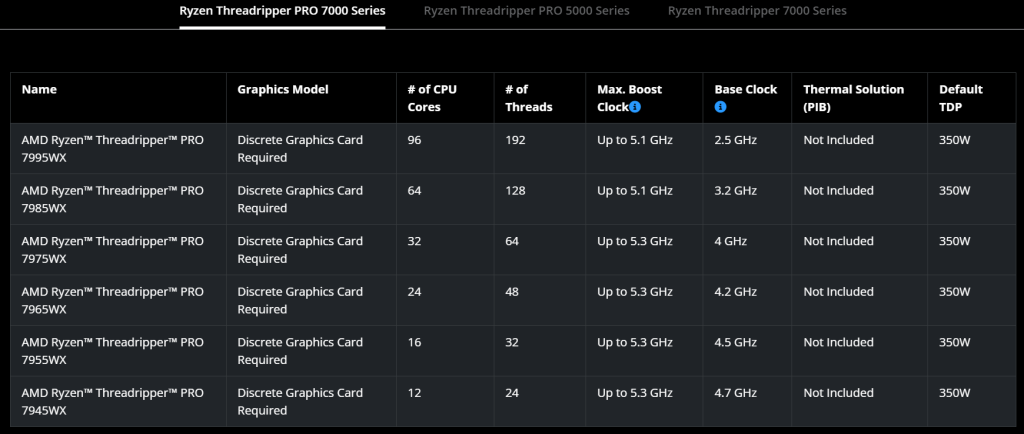

Back in 2021, I was really excited about running a nested virtualized VMware lab on a creator workstation with 128GB of RAM and 24 logical cores. In terms of computing power (CPU and RAM), I am super impressed with the AMD Ryzen™ Threadripper™ PRO 7995WX and its 96 cores. However, I’d probably have a much longer conversation with my wife if I accidentally ordered this CPU from our shared bank account, so for the sake of family peace, I decided against it. I can still make do with my 24-core, 3-year-old architecture to keep my hands-on experience with the latest technologies up to date, especially in a hybrid environment, using my lab as a source for migration and modernization demos.

Maybe one day, when prices drop into the more affordable range – like with some of my retro computer builds based on flagship processors from the 90s and 00s – I’ll consider the AMD Ryzen™ Threadripper™ Processors.


One challenge with VMware Workstation or Hyper-V-based local disks (even if they’re shared) is that they’re not real storage solutions. Personally, I prefer having shared volumes like iSCSI, NFS, or CIFS/SMB to support VMware vSphere, Hyper-V, or any type of containers/Kubernetes labs.

So, the question was: how can I provide real storage to these nested virtualized nodes? The solution I came up with was to invest in an SSD-based Synology system with 64GB of RAM and 10GbE NICs.

This setup allows the Synology to run some VMs that need to be always on (such as network gateway appliances, domain controllers, and databases), while also providing iSCSI, NFS, or CIFS/SMB over a 10GbE connection to the single server running all the nested clusters. It might seem strange to have just one storage system and one workstation, but trust me – this can function like a large production data center in many scenarios.
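To put the 10GbE link into perspective, here is a minimal back-of-the-envelope sketch comparing it with the 1 Gbps ports the NAS ships with. The 100 GB image size and the `efficiency` parameter are assumptions for illustration only; real iSCSI or NFS throughput will be lower because of protocol overhead and disk speed.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Estimate the time to move size_gb gigabytes over a link of link_gbps gigabits/s.

    efficiency is a hypothetical fudge factor (0 < efficiency <= 1) for protocol
    overhead; 1.0 means the theoretical line rate.
    """
    if size_gb < 0:
        raise ValueError("size_gb must not be negative")
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    size_gigabits = size_gb * 8  # 1 byte = 8 bits
    return size_gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    image_size_gb = 100  # assumed size of a VM image, not a measured value
    for link_gbps in (1, 10):
        seconds = transfer_seconds(image_size_gb, link_gbps)
        print(f"{link_gbps:>2} Gbps link: about {seconds:.0f} s for {image_size_gb} GB")
```

At line rate, the 10GbE link moves the same image in a tenth of the time, which is the difference that matters when the nested clusters boot from shared storage.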

I’m not entirely sure why gamers need a Cat8 S/FTP patch cable (Vention, 40Gbps, 2000MHz), but it works great for the 10G link between my workstation and NAS.

This is my 3-year-old, budget-friendly (marriage-approved) workstation choice.

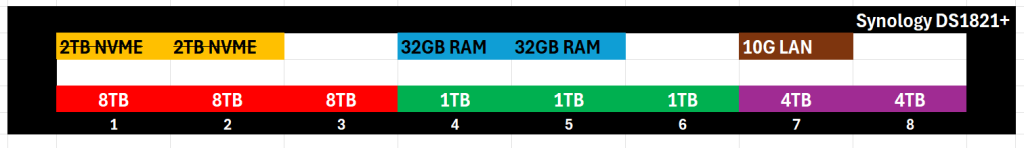

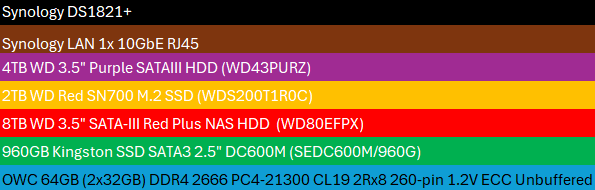

I went with an 8-disk setup, ordering the DiskStation® DS1821+ from Synology, and adding the E10G18-T1 NIC for direct crosslinking with my workstation running the entire nested virtualized datacenter.

When it came to RAM, I apologize to Synology for not purchasing memory modules from their store. I found larger 64GB modules at a much better price, so I went with OWC, which worked perfectly fine.

As for the disks, I decided on the following layout:

Kingston Enterprise-grade SSDs were more than sufficient for the VMs, and currently, I don’t need a cache for the RED array (used for archiving).

Unboxing all this was so much fun!

As you can see, the system comes with 4x1G LAN ports by default.

So, I installed everything.

This isn’t my first Synology setup, and it definitely won’t be my last. I’m quite familiar with how to install the disks.

Adding RAM and the 10G NIC was pretty straightforward.

The final result looks like this:

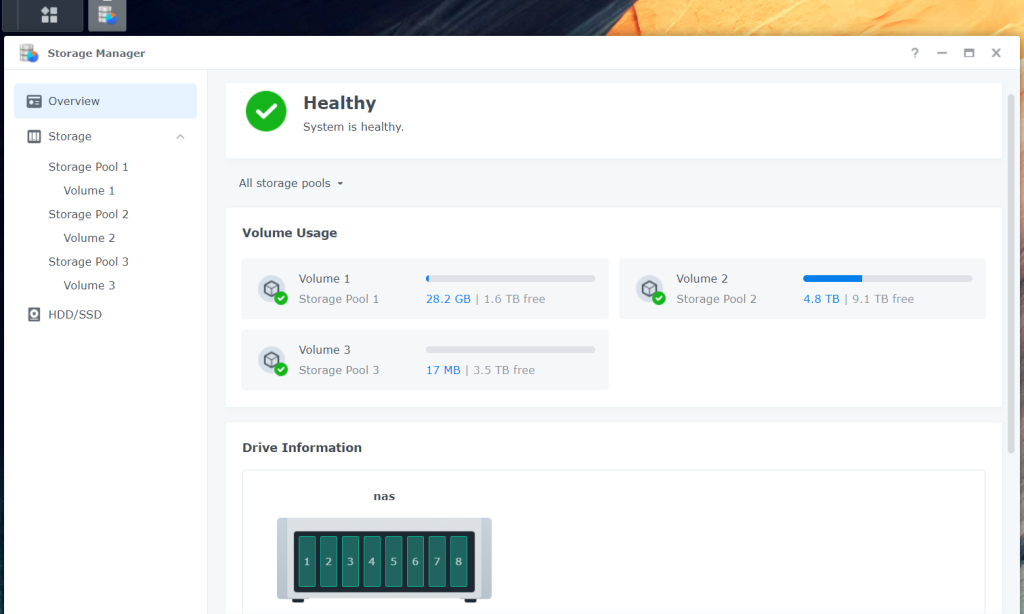

I went with RAID 5 for the pools, with one volume for each. Over time, I’ll probably tweak this setup, especially when I introduce iSCSI over 10G to the workstation PC.
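As a rough illustration of what RAID 5 costs in capacity, here is a minimal sketch of the usual rule of thumb: one disk's worth of space goes to parity, so usable capacity is (n - 1) times the disk size. The disk counts and sizes below are hypothetical, not my actual pool layout, and the figures ignore filesystem and DSM overhead.

```python
def raid5_usable_tb(disk_count: int, disk_size_tb: float) -> float:
    """Return the approximate usable capacity of a RAID 5 pool in TB.

    Assumes all disks are the same size. RAID 5 needs at least three disks
    and tolerates the failure of exactly one of them.
    """
    if disk_count < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    if disk_size_tb <= 0:
        raise ValueError("disk_size_tb must be positive")
    return (disk_count - 1) * disk_size_tb


if __name__ == "__main__":
    # Hypothetical example pools: (number of disks, size per disk in TB)
    for count, size_tb in ((4, 2.0), (4, 8.0)):
        usable = raid5_usable_tb(count, size_tb)
        raw = count * size_tb
        print(f"{count} x {size_tb} TB: {usable} TB usable of {raw} TB raw")
```

With four disks per pool, a quarter of the raw capacity goes to parity, which is a fair trade for surviving a single disk failure in a lab.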

That’s it for now! In my next blog article, I’ll dive into building the best lab for incredible performance at an affordable price.

Most recently, I used this lab to test VMware alternatives like Windows Server 2025, System Center 2025 VMM, Windows Admin Center, Azure Arc, and Azure Stack HCI, and I’m eagerly awaiting the newly announced Azure Local solution.

I’m planning on setting up Kubernetes clusters and am especially excited about deploying Red Hat OpenShift.

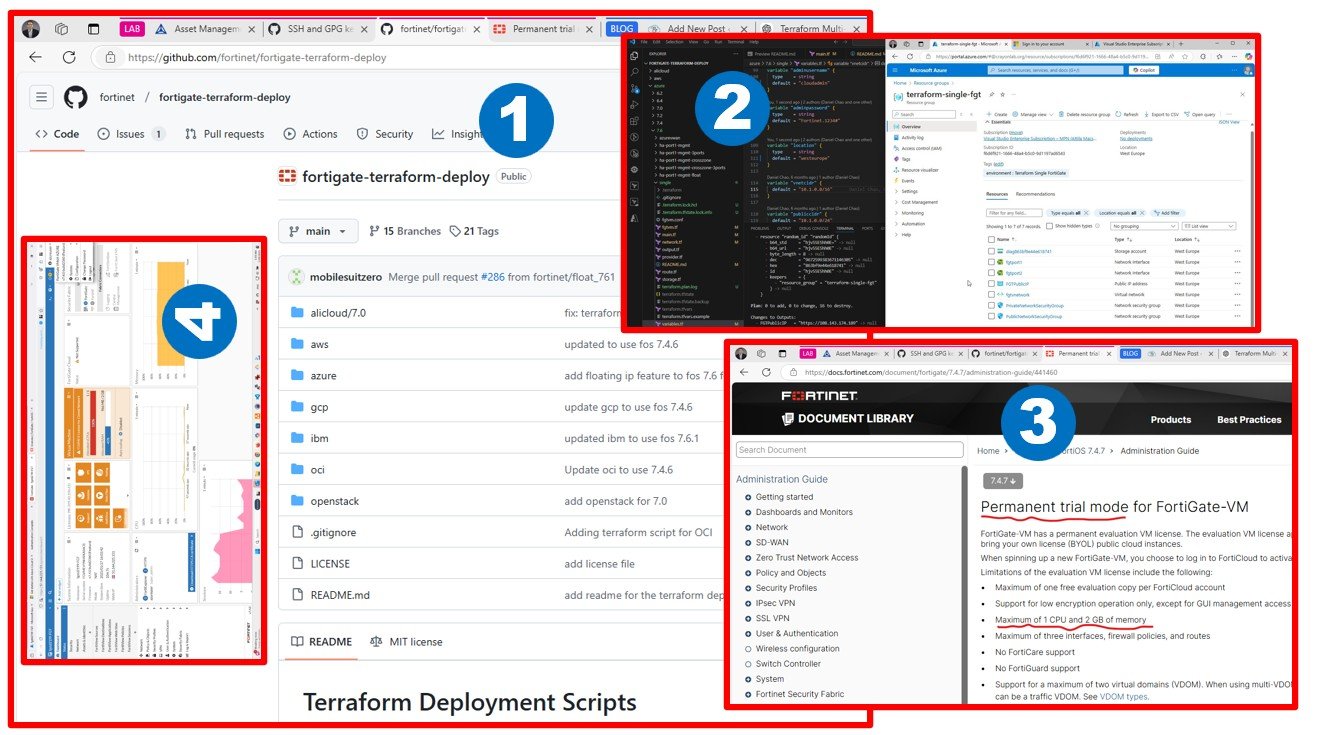

I plan to deploy Fortinet gateways, as they are more widely used and more enterprise-ready than my current MikroTik-based VPN setup (which connects my lab to Azure, AWS, and GCP).

I’ll approach this lab in a repeatable, automated way using DevOps practices, likely leveraging Terraform providers, plus Ansible where in-guest provisioning is necessary.

Great things ahead for the holidays! But of course, family comes first. I’ll do my best, so stay tuned!

Happy Holidays!

Attila