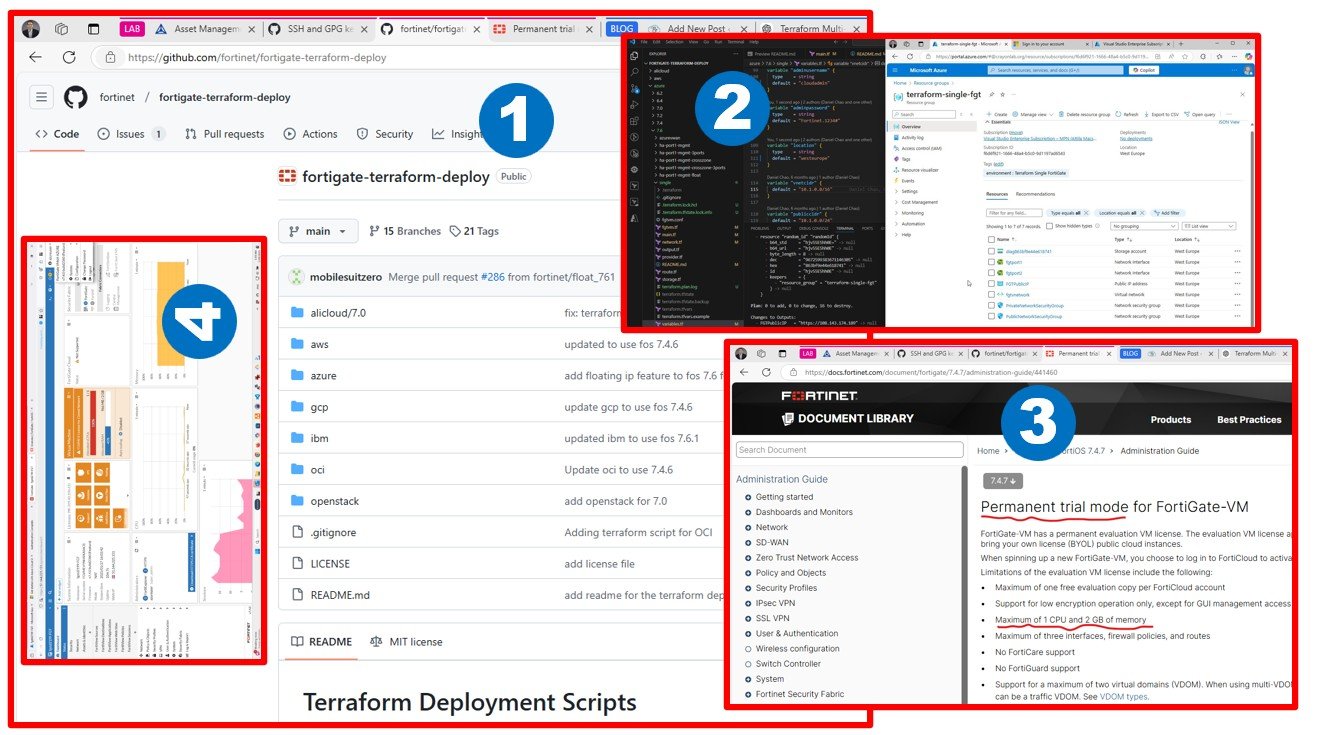

I generated Terraform code for the same Ubuntu VM on several clouds with OpenAI’s ChatGPT (GPT-4) and compared the VM prices per region in a fancy table. The HCL results are quite accurate; however, I would not trust the price comparison because the pricing information is outdated. You can get adjustable results in minutes, saving lots of human research time.

ChatGPT use cases to support working with multi-cloud environments

In this article, I tried only scenarios #2 (generate Terraform code) and #7 (price comparison) from the list below:

I remember I got an interview question some time ago – the answer is obviously no

The response from GPT-4 is very nice and gives you an idea of how a software architect should structure the folders in case of multi-cloud deployments:

Cross-platform orchestration: generate Terraform code for multi-cloud deployments

My goal was the simplest possible: a plain Ubuntu VM in each cloud.

We got this code for AzureRM. The size “Standard_D2_v4” (2 vCPU, 8 GB RAM) is pretty nice, and the source_image_reference is quite accurate.

    provider "azurerm" {
      features {}
    }

    resource "azurerm_resource_group" "example" {
      name     = "example-resources"
      location = "West US 2"
    }

    resource "azurerm_virtual_network" "example" {
      name                = "example-network"
      address_space       = ["10.0.0.0/16"]
      location            = azurerm_resource_group.example.location
      resource_group_name = azurerm_resource_group.example.name
    }

    resource "azurerm_subnet" "example" {
      name                 = "internal"
      resource_group_name  = azurerm_resource_group.example.name
      virtual_network_name = azurerm_virtual_network.example.name
      address_prefixes     = ["10.0.2.0/24"]
    }

    resource "azurerm_network_interface" "example" {
      name                = "example-nic"
      location            = azurerm_resource_group.example.location
      resource_group_name = azurerm_resource_group.example.name

      ip_configuration {
        name                          = "internal"
        subnet_id                     = azurerm_subnet.example.id
        private_ip_address_allocation = "Dynamic"
      }
    }

    resource "azurerm_linux_virtual_machine" "example" {
      name                            = "example-vm"
      resource_group_name             = azurerm_resource_group.example.name
      location                        = azurerm_resource_group.example.location
      size                            = "Standard_D2_v4" # 2 vCPU, 8 GB RAM
      admin_username                  = "ubuntu"
      network_interface_ids           = [azurerm_network_interface.example.id]
      disable_password_authentication = true

      os_disk {
        caching              = "ReadWrite"
        storage_account_type = "Standard_LRS"
      }

      source_image_reference {
        publisher = "Canonical"
        offer     = "UbuntuServer"
        sku       = "18.04-LTS"
        version   = "latest"
      }

      admin_ssh_key {
        username   = "ubuntu"
        public_key = file("~/.ssh/id_rsa.pub")
      }
    }

GCP’s main.tf is equally good. We got the same Ubuntu release as on Azure: “ubuntu-os-cloud/ubuntu-1804-lts”.

I think “n1-standard-2” (2 vCPU, 7.5 GB RAM) is a good attempt; we do not need a GCP custom VM type to meet the 8 GB RAM requirement precisely.

    provider "google" {
      project = "<YOUR_PROJECT_ID>"
      region  = "us-central1"
      zone    = "us-central1-a"
    }

    resource "google_compute_instance" "example" {
      name         = "example-vm"
      machine_type = "n1-standard-2" # 2 vCPU, 7.5 GB RAM

      boot_disk {
        initialize_params {
          image = "ubuntu-os-cloud/ubuntu-1804-lts"
        }
      }

      network_interface {
        network = "default"

        access_config {
          // Ephemeral IP
        }
      }

      service_account {
        scopes = ["userinfo-email", "compute-ro", "storage-ro"]
      }
    }
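If the 8 GB requirement did have to be met exactly, a custom machine type would be one way to do it. The following is only a sketch, not part of the GPT-4 output: it reuses the generated instance and swaps in the N1 custom type format `custom-<vCPUs>-<memory in MB>` described in the google provider documentation. The resource label `custom_example` and the instance name are hypothetical.

```hcl
provider "google" {
  project = "<YOUR_PROJECT_ID>"
  region  = "us-central1"
  zone    = "us-central1-a"
}

resource "google_compute_instance" "custom_example" {
  name = "example-vm-custom" # hypothetical name

  # N1 custom machine type: 2 vCPUs and 8192 MB (8 GB) of RAM.
  machine_type = "custom-2-8192"

  boot_disk {
    initialize_params {
      image = "ubuntu-os-cloud/ubuntu-1804-lts"
    }
  }

  network_interface {
    network = "default"
  }
}
```

Custom types may be priced differently from predefined ones, so the exact match is not automatically the better deal.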

The AWS code (first attempt) is surprisingly short compared to public HCL examples such as the aws_instance resource page (hashicorp/aws) in the Terraform Registry.

The AMI lookup and the network and credit specifications from that example are missing, but still, not bad. “t2.large” (2 vCPU, 8 GB) is what I asked for.

    provider "aws" {
      region = "us-west-2"
    }

    resource "aws_instance" "example" {
      ami           = "ami-0c55b159cbfafe1f0" # Ubuntu 18.04 LTS - us-west-2
      instance_type = "t2.large" # 2 vCPU, 8 GB
    }

Let’s ask for a correction, adding what I missed:

    provider "aws" {
      region = "us-west-2"
    }

    data "aws_vpc" "default" {
      default = true
    }

    data "aws_subnet" "default" {
      availability_zone = "us-west-2a"
      default_for_az    = true
    }

    data "aws_security_group" "default" {
      name   = "default"
      vpc_id = data.aws_vpc.default.id
    }

    data "aws_ami" "ubuntu" {
      most_recent = true
      owners      = ["099720109477"] # Canonical

      filter {
        name   = "name"
        values = ["ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-*"]
      }

      filter {
        name   = "virtualization-type"
        values = ["hvm"]
      }
    }

    resource "aws_instance" "example" {
      ami                    = data.aws_ami.ubuntu.id
      instance_type          = "t2.large" # 2 vCPU, 8 GB RAM
      key_name               = "your_key_pair_name"
      vpc_security_group_ids = [data.aws_security_group.default.id]
      subnet_id              = data.aws_subnet.default.id

      tags = {
        Name = "example-vm"
      }
    }

Woohoo, I have the AMI lookup now, and it resolves to the same Ubuntu release as on the other clouds:

“ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-*”
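The corrected code still leaves out the credit specification mentioned earlier. A minimal sketch of how it could be added to a burstable T-family instance is shown here; this is not GPT-4 output, the resource label `burstable_example` is hypothetical, and the subnet and security group are omitted so that the account's default VPC is assumed.

```hcl
provider "aws" {
  region = "us-west-2"
}

data "aws_ami" "ubuntu" {
  most_recent = true
  owners      = ["099720109477"] # Canonical

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-*"]
  }
}

resource "aws_instance" "burstable_example" {
  ami           = data.aws_ami.ubuntu.id
  instance_type = "t2.large" # 2 vCPU, 8 GB RAM

  # CPU credit mode for burstable instances: "standard" or "unlimited".
  credit_specification {
    cpu_credits = "standard"
  }

  tags = {
    Name = "example-vm"
  }
}
```

With `"unlimited"`, the instance can burst beyond its earned credits at extra cost, so the choice matters for any price comparison.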

Regarding Oracle Cloud, we got nothing at first because the provider is officially called Oracle Cloud Infrastructure (OCI). Fair enough.

This is what ChatGPT generated:

    provider "oci" {
      tenancy_ocid     = "<TENANCY_OCID>"
      user_ocid        = "<USER_OCID>"
      fingerprint      = "<FINGERPRINT>"
      private_key_path = "<PRIVATE_KEY_PATH>"
      region           = "us-ashburn-1"
    }

    data "oci_identity_availability_domains" "ads" {
      compartment_id = "<COMPARTMENT_OCID>"
    }

    resource "oci_core_virtual_network" "example" {
      cidr_block     = "10.0.0.0/16"
      compartment_id = "<COMPARTMENT_OCID>"
      display_name   = "example-vcn"
      dns_label      = "examplevcn"
    }

    resource "oci_core_subnet" "example" {
      availability_domain = data.oci_identity_availability_domains.ads.availability_domains[0].name
      cidr_block          = "10.0.1.0/24"
      compartment_id      = "<COMPARTMENT_OCID>"
      display_name        = "example-subnet"
      dns_label           = "examplesubnet"
      security_list_ids   = [oci_core_security_list.example.id]
      vcn_id              = oci_core_virtual_network.example.id
    }

    resource "oci_core_security_list" "example" {
      compartment_id = "<COMPARTMENT_OCID>"
      display_name   = "example-security-list"
      vcn_id         = oci_core_virtual_network.example.id

      ingress_security_rules {
        protocol = "6" # TCP
        source   = "0.0.0.0/0"

        tcp_options {
          min = 22
          max = 22
        }
      }
    }

    data "oci_core_images" "ubuntu" {
      compartment_id           = "<COMPARTMENT_OCID>"
      operating_system         = "Ubuntu"
      operating_system_version = "18.04"
      shape                    = "VM.Standard2.2" # 2 vCPU, 15 GB RAM
      sort_by                  = "TIMECREATED"
      sort_order               = "DESC"
    }

    resource "oci_core_instance" "example" {
      availability_domain = data.oci_identity_availability_domains.ads.availability_domains[0].name
      compartment_id      = "<COMPARTMENT_OCID>"
      display_name        = "example-vm"
      shape               = "VM.Standard2.2" # 2 vCPU, 15 GB RAM

      agent_config {
        is_monitoring_disabled = false
      }

      create_vnic_details {
        subnet_id        = oci_core_subnet.example.id
        display_name     = "example-vnic"
        assign_public_ip = true
      }

      source_details {
        source_type = "image"
        source_id   = data.oci_core_images.ubuntu.images[0].id
      }

      metadata = {
        ssh_authorized_keys = "<YOUR_PUBLIC_SSH_KEY>"
      }
    }

Haha, an exact match surely exists: OCI presales people keep talking about funky VM configurations using Flexible Shapes. A flexible shape lets you customize the number of OCPUs and the amount of memory when launching or resizing your VM. The image is good and has the same OS version.
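As a sketch of what such a flexible shape could look like in Terraform (not GPT-4 output), the instance below requests 8 GB of memory through a `shape_config` block. The shape name `VM.Standard.E4.Flex`, the resource label `flex_example`, and all input variables are assumptions for illustration. It also assumes Oracle's documented ratio of one OCPU to two vCPUs on x86 shapes, and that the `oci` provider is configured as in the generated code above.

```hcl
# Hypothetical inputs; in practice these would come from the data sources
# and resources in the generated code above.
variable "compartment_ocid" { type = string }
variable "availability_domain" { type = string }
variable "subnet_id" { type = string }
variable "image_id" { type = string }
variable "ssh_public_key" { type = string }

resource "oci_core_instance" "flex_example" {
  availability_domain = var.availability_domain
  compartment_id      = var.compartment_ocid
  display_name        = "example-vm-flex"
  shape               = "VM.Standard.E4.Flex" # assumed flexible shape

  # 1 OCPU is assumed to equal 2 vCPUs, so this targets 2 vCPU and 8 GB RAM.
  shape_config {
    ocpus         = 1
    memory_in_gbs = 8
  }

  create_vnic_details {
    subnet_id        = var.subnet_id
    assign_public_ip = true
  }

  source_details {
    source_type = "image"
    source_id   = var.image_id
  }

  metadata = {
    ssh_authorized_keys = var.ssh_public_key
  }
}
```

The chosen image has to be compatible with the flexible shape, so the image lookup would need its `shape` filter adjusted accordingly.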

Price comparison in a fancy table

Asking an AI for a price comparison can yield quite outdated pricing information; however, it’s quite fun to see how small the difference in EUR is for the same VM (this is probably similar today).

Obviously, you are not using the public cloud for VM hosting only, so this example is disconnected from real-world pricing exercises.

And it is amazing to see how easy it is to ask for additional things.

In conclusion, I am impressed with GPT-4’s capabilities to support working in a multi-cloud environment. I recently worked on a DevOps project and wrote the HCL code myself, following the design patterns our agile team agreed on; however, I can imagine that lots of cloud software engineers (developers) will use AI to support their work and save time.