This blog explores deploying FortiGate VM in Azure, tackling challenges like license restrictions, Terraform API changes, and Marketplace agreements. It offers insights, troubleshooting tips, and lessons learned for successful single VM deployment in Azure. Using an evaluation license combined with B-series Azure VMs running FortiGate is primarily intended for experimentation and is not recommended for production environments.

Who Has Nailed Terraform-Based Multi-Cloud Marketplace Deployments Before?

I know individuals who excel in Terraform and deeply understand DevOps. I’ve also come across people who have deployed Azure Marketplace items directly through the portal. However, only a handful of my connections have experience deploying cloud marketplace solutions, especially in a multi-cloud environment. This is an exciting challenge, and I’m eager to dive into it. I hope this article helps save you time and effort if you share similar aspirations.

Forking Public Code Repositories for Terraform Deployments

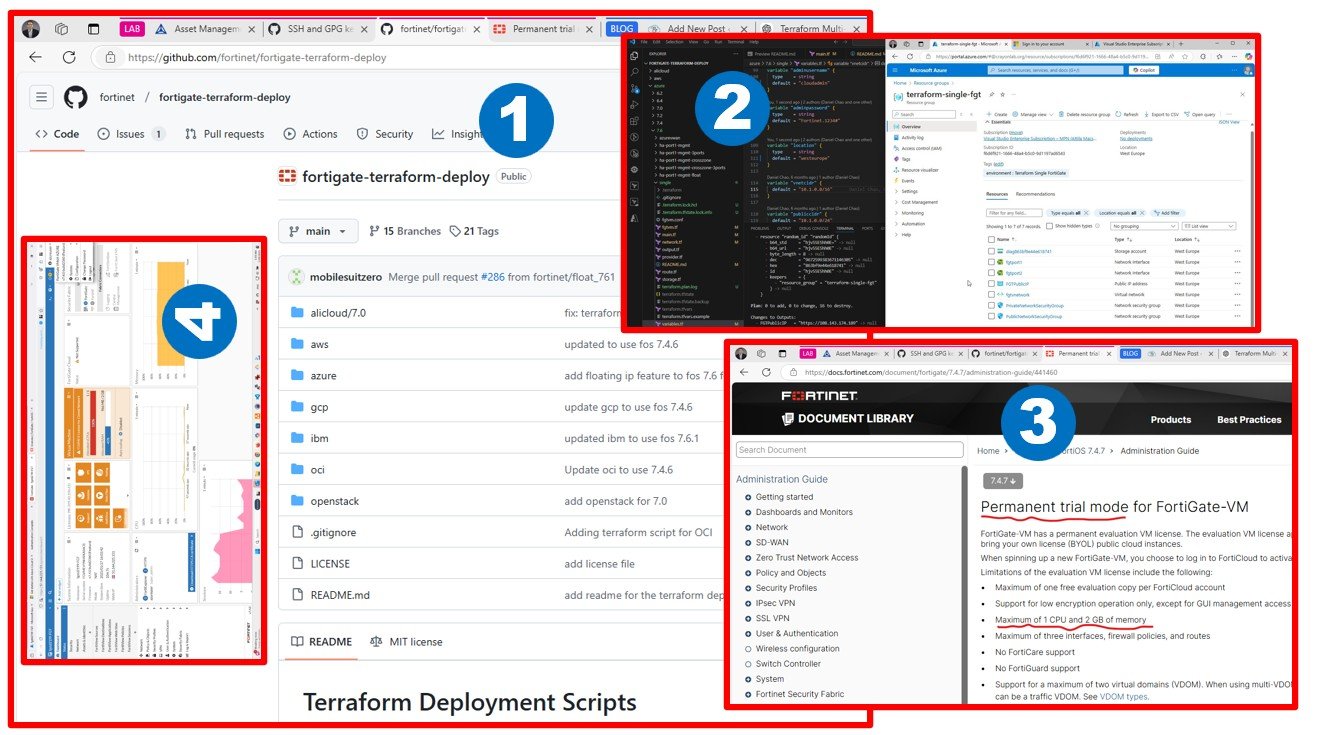

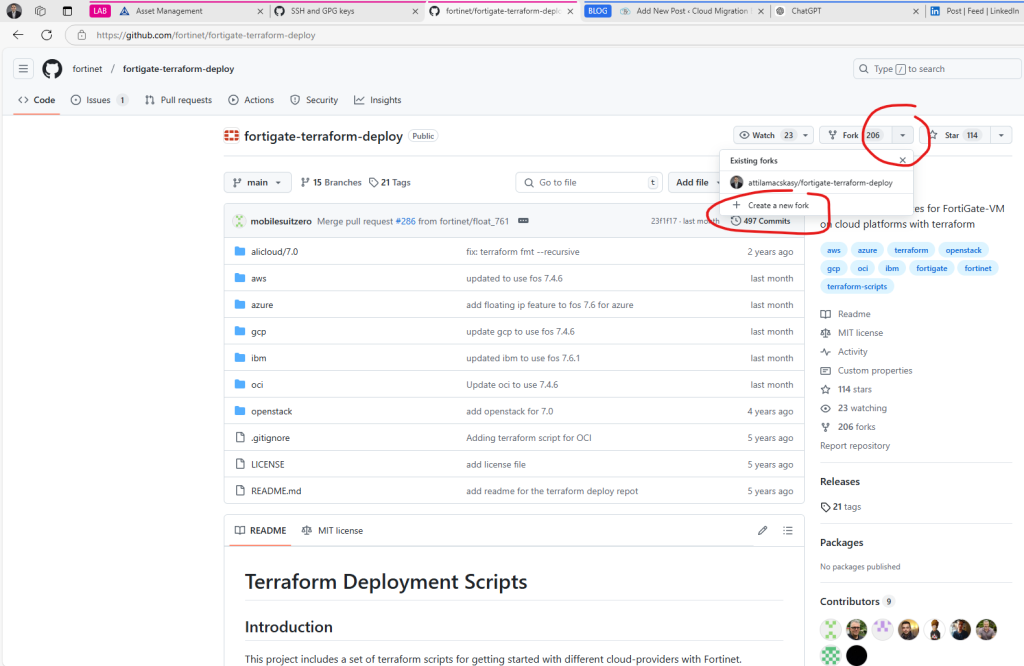

Thanks to Fortinet, we have access to an excellent public repository: fortinet/fortigate-terraform-deploy: Deployment templates for FortiGate-VM on cloud platforms with terraform

This repository provides deployment templates for FortiGate-VM on various cloud platforms using Terraform.

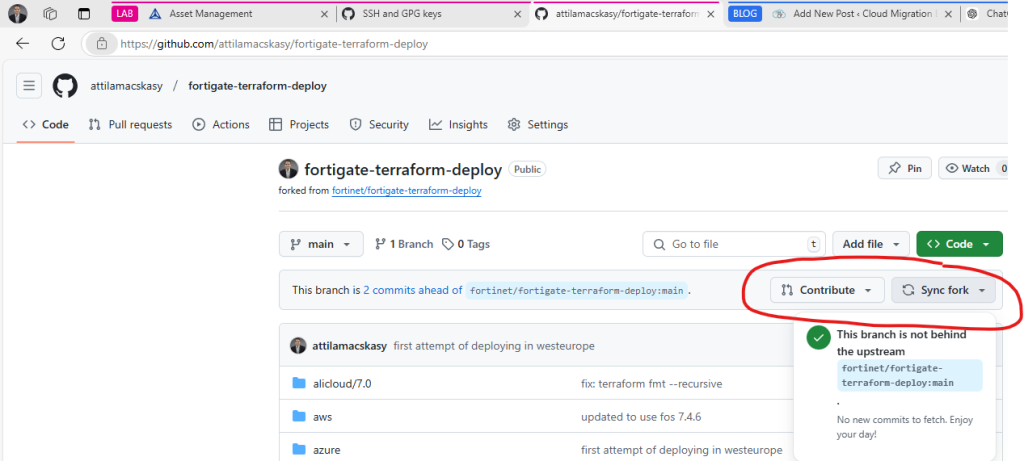

Since you don’t have editor rights to the original GitHub repository (and therefore cannot push changes directly), the best approach is to create a fork in your GitHub account. Forking allows you to make modifications while keeping the original repository intact.

One fantastic feature GitHub offers is the ability to sync with the upstream source. This means you can pull updates from the original repository, merging their changes with your updates to stay aligned. If you make meaningful updates, such as bug fixes, you can propose these changes to the original repository via a pull request. Pretty amazing, right?

For my first attempt, I deployed a single VM using the following path:

In summary, here’s what I gained from the FortiGate (Single VM) deployment process on Azure:

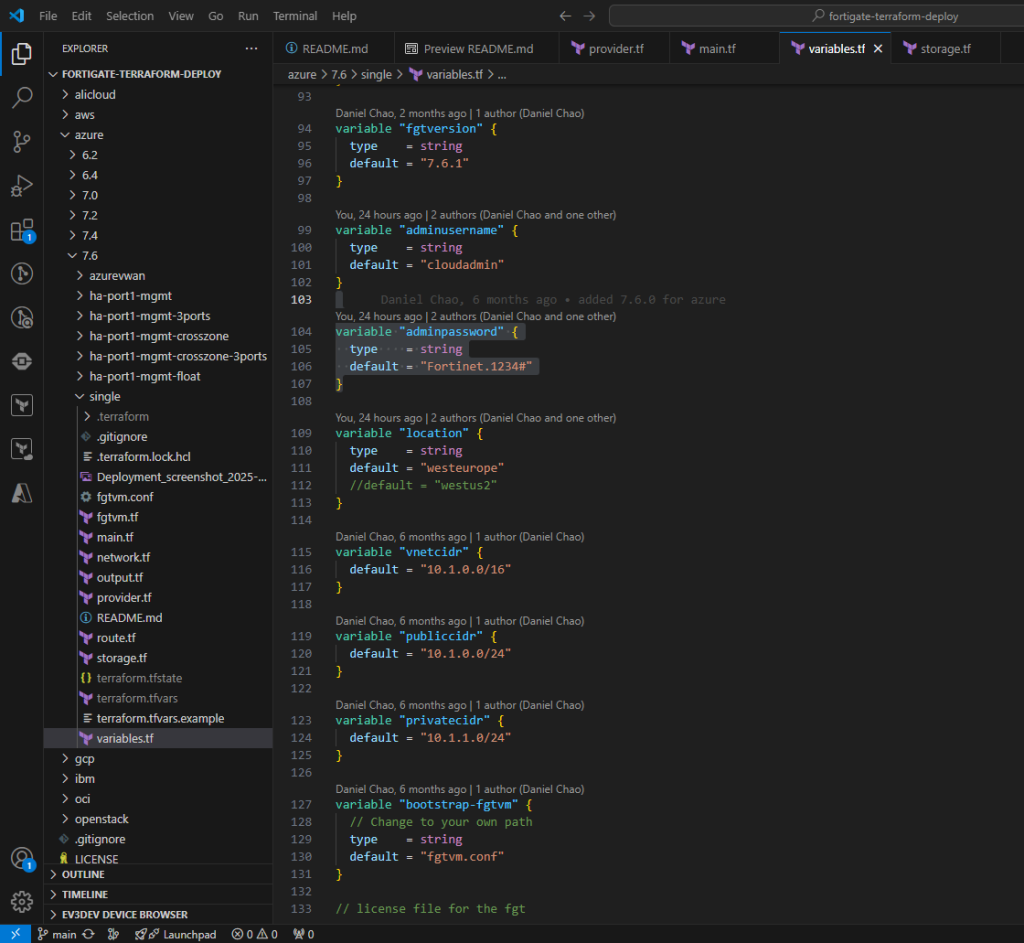

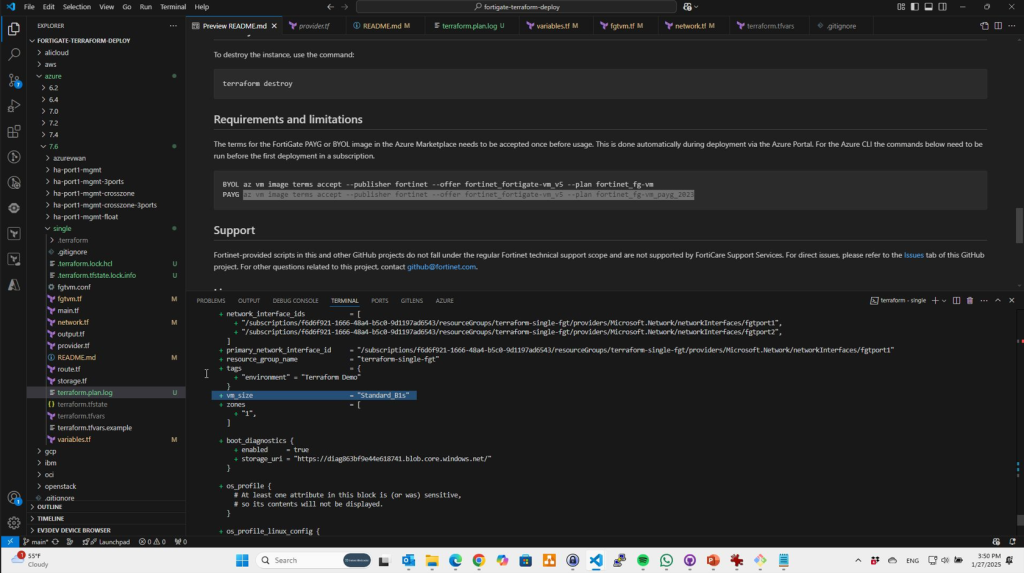

ISSUE #1: Adjusting VM Size in Terraform code to meet FortiGate Evaluation License Requirements (from Standard_F4s_v2 to Standard_B1s)

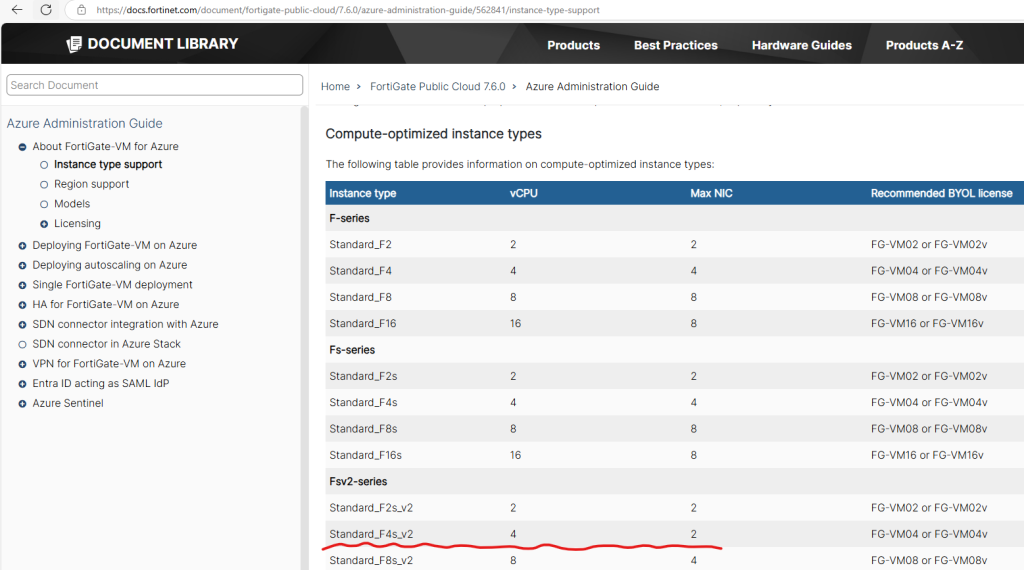

Fortinet documentation recommends compute-optimized instance types, so B-series (burstable) Azure VM sizes are not ideal for this workload. For experimentation that stays within the permanent trial license, however, any VM with 1 vCPU and at most 2 GB of RAM will do the job.

Instance type support | FortiGate Public Cloud 7.6.0 | Fortinet Document Library

Currently, I don’t have a license for any Fortinet products, but I still want to test and deploy the solution. This creates a challenge with the provided deployment script, as it uses a VM size (Standard_F4s_v2) that exceeds the limitations of the evaluation license. To work around this, I need to modify the variables.tf file and adjust the VM size to fit the evaluation license requirements: from Standard_F4s_v2 (4 vCPUs and 8 GiB of memory) to Standard_B1s (1 vCPU and 1 GiB of memory), which stays within the 1 CPU / 2 GB limit.

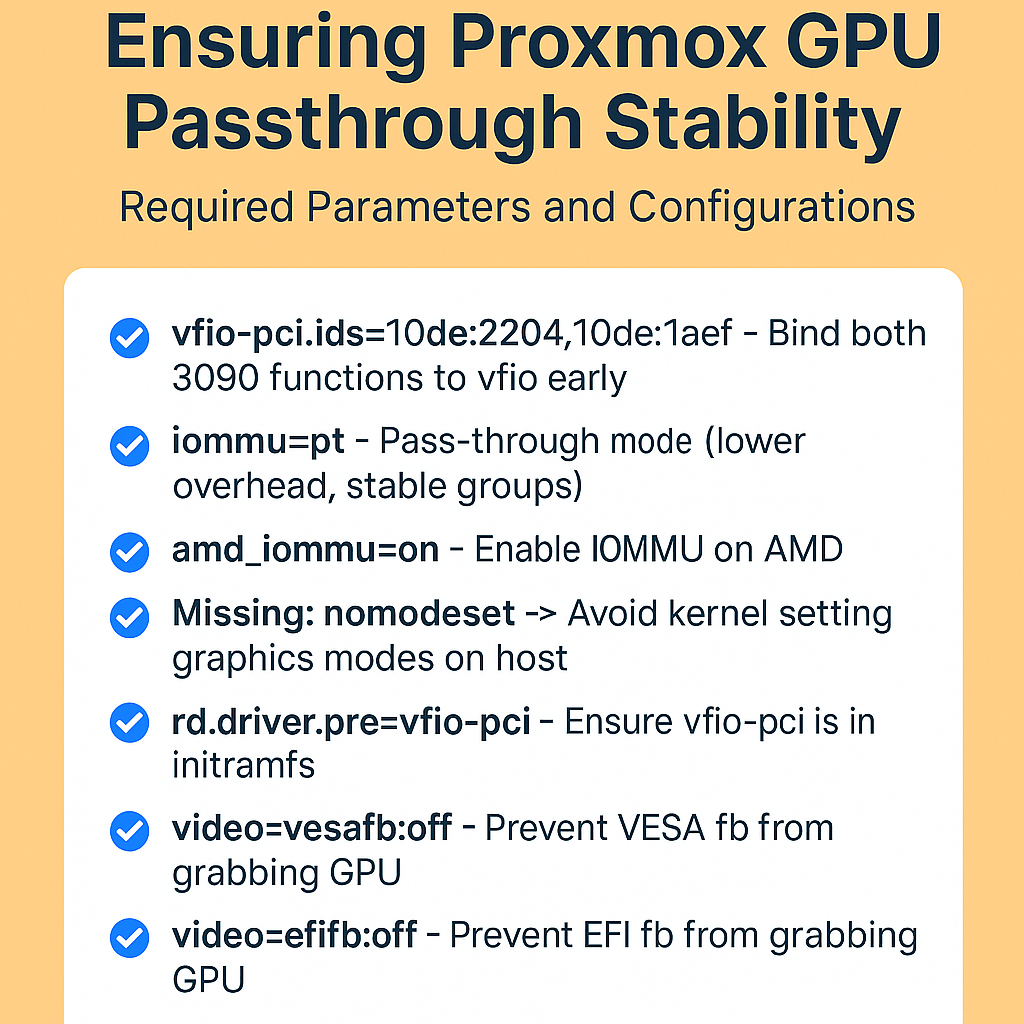

According to the Fortinet article Permanent trial mode for FortiGate-VM | FortiGate / FortiOS 7.4.7 | Fortinet Document Library, the evaluation VM license has specific restrictions, with the most critical ones for deployment being:

- Maximum of One Free Evaluation Copy per FortiCloud Account

  This limitation makes deploying HA (High Availability) configurations problematic, as I can only license a single FortiGate instance (though I’ll explore potential workarounds, if available).

- Maximum of 1 CPU and 2 GB of Memory

  The provided script specifies a Standard_F4s_v2 VM size, which has 4 vCPUs and 8 GB of memory, exceeding the evaluation license limits. Adjusting this to Standard_B1s ensures compliance with the license restrictions.

By addressing these constraints, I can proceed with testing and deploying the solution within the boundaries of the evaluation license.
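If you would rather leave the fork's defaults untouched, the same size change could be expressed as a variable override. This is only a minimal sketch: it assumes the variable is still called `size`, as in the repository's variables.tf, and relies on `terraform.tfvars` being the file Terraform loads automatically from the working directory.

```hcl
# terraform.tfvars (sketch; place it next to the deployment's variables.tf)
# Overrides the default VM size so the instance fits the evaluation
# license limit of 1 CPU and 2 GB of memory.
size = "Standard_B1s"
```

The same value can also be passed for a single run with `terraform apply -var 'size=Standard_B1s'`.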

    ...
    // x86 - Standard_F4s_v2
    // arm - Standard_D2ps_v5
    variable "size" {
      type = string
      //default = "Standard_F4s_v2"
      //changed due to limitations in FortiGate EVAL (Maximum of 1 CPU and 2 GB of memory)
      default = "Standard_B1s"
    }
    ...

ISSUE #2: Observations on Terraform Provider API Changes (as of January 2025).

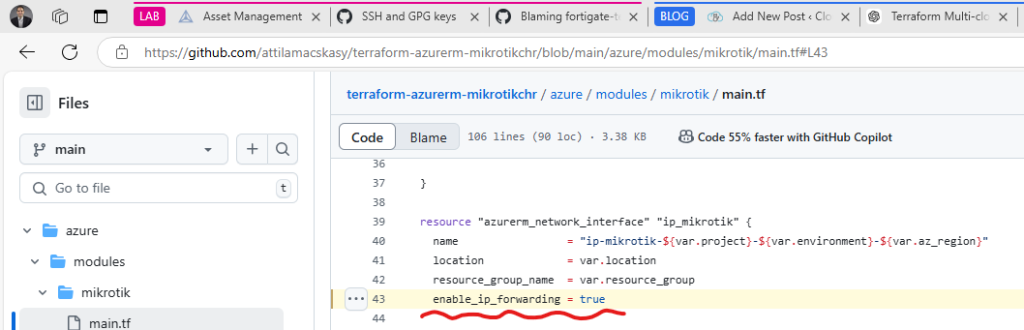

I encountered some minor issues related to the Azure Terraform Provider while working on this project. These challenges aren’t surprising, as Microsoft frequently updates the Azure API, causing corresponding changes in the Terraform resource provider. It’s difficult for codebases to stay fully up to date under these conditions.

While you can mitigate this by specifying Terraform and provider versions in provider.tf, the best approach is to regularly test your code and stay informed about updates. This is one of the small downsides of maintaining DevOps practices – unlike using the Azure Portal, where such issues don’t arise.
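Pinning versions in provider.tf could look roughly like the sketch below. The constraint values are my assumptions, not taken from the Fortinet repository; the renamed arguments discussed in this section appear to belong to the 4.x line of the azurerm provider, so adjust the numbers to whatever you have actually tested.

```hcl
terraform {
  # Assumed minimum CLI version; use the one you tested with.
  required_version = ">= 1.5.0"

  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
      # "~> 4.0" allows 4.x releases but blocks the next major version,
      # which is where breaking renames usually land.
      version = "~> 4.0"
    }
  }
}

provider "azurerm" {
  features {}
  # azurerm 4.x expects a subscription ID, either set here as
  # subscription_id or through the ARM_SUBSCRIPTION_ID environment variable.
}
```

A pin like this does not remove the need to retest; it only makes the breakage happen when you choose to bump the version rather than on a random `terraform init`.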

For example, in fortigate-terraform-deploy/azure/7.6/single/network.tf (at main · attilamacskasy/fortigate-terraform-deploy), the way you configure IP forwarding has changed. IP forwarding is a critical setting for firewall appliance NICs and has always been widely used across vendors.

I’ve had to manage similar configurations in other deployments, like the Mikrotik CHR setup I wrote a year ago. Chances are, that code might not work anymore either – something to laugh about as we navigate these ever-changing tools!

terraform-azurerm-mikrotikchr/azure/modules/mikrotik/main.tf at main · attilamacskasy/terraform-azurerm-mikrotikchr

Like I said 🙂 My fix is below, regarding fgtport2

    resource "azurerm_network_interface" "fgtport2" {
      ...
      //enable_ip_forwarding = true
      // An argument named "enable_ip_forwarding" is not expected here.
      ip_forwarding_enabled = true
      ...

Another minor issue has surfaced: a new requirement now mandates specifying the storage type for the Azure image. Unsurprisingly, my code faces the same issue, and I’ll get around to fixing it at some point. However, finding a clean way to inject default RSC configuration into Mikrotik remains a challenge. 🙂

fortigate-terraform-deploy/azure/7.6/single/fgtvm.tf at main · attilamacskasy/fortigate-terraform-deploy

    resource "azurerm_image" "custom" {
      // The argument "storage_type" is required, but no definition was found.
      ...
      storage_type = "Standard_LRS"
      ...
    }

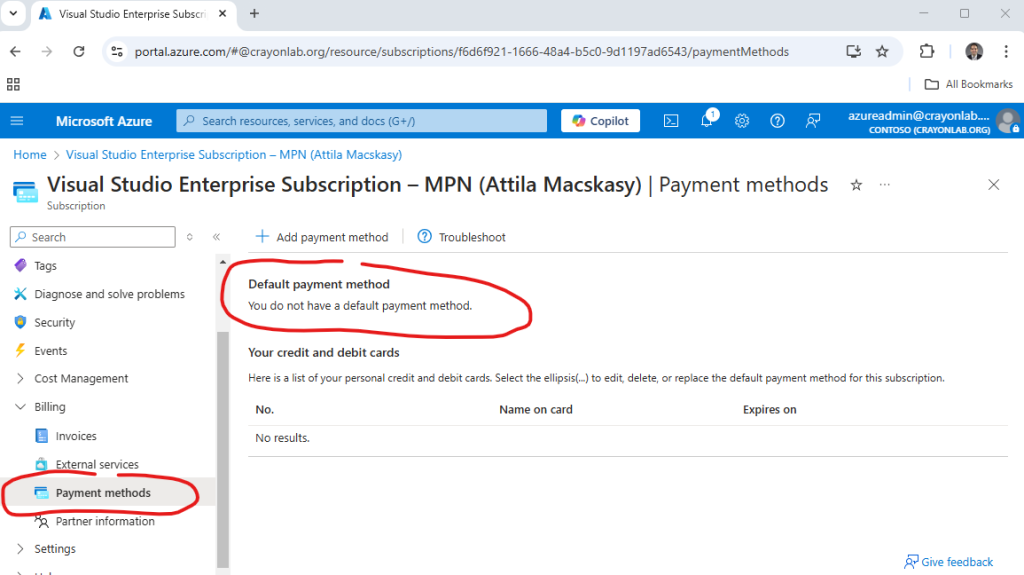

ISSUE #3: FortiGate VM Deployment Failed Due to Validation Errors (lack of payment method)

    Error: creating/updating Virtual Machine (Subscription: "f6d6f921-1666-48a4-b5c0-9d1197ad6543"
    │ Resource Group Name: "tf-single-fgt-001"
    │ Virtual Machine Name: "fgtvm"): performing CreateOrUpdate: unexpected status 400 (400 Bad Request) with error: ResourcePurchaseValidationFailed: User failed validation to purchase resources. Error message: 'Offer with PublisherId: 'fortinet', OfferId: 'fortinet_fortigate-vm_v5' cannot be purchased due to validation errors. For more information see details. Correlation Id: '61ff72d3-3752-b363-d763-4374600b9f20' The 'unknown' payment instrument(s) is not supported for offer with OfferId: 'fortinet_fortigate-vm_v5', PlanId 'fortinet_fg-vm_payg_2023_g2'. Correlation Id '61ff72d3-3752-b363-d763-4374600b9f20'.'
    │
    │ with azurerm_virtual_machine.fgtvm[0],
    │ on fgtvm.tf line 76, in resource "azurerm_virtual_machine" "fgtvm":
    │ 76: resource "azurerm_virtual_machine" "fgtvm" {

Terraform apply finally works! I’m breaking out the non-alcoholic champagne to celebrate. However, just as it begins deploying the last two resources (VM and NIC) for the Fortinet OS Linux VM, I hit a roadblock, again… 🙁

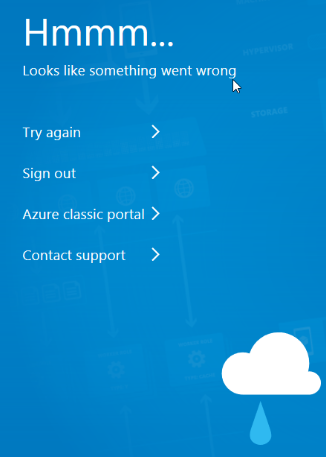

I can’t blame the authors; this is likely a user error. Imagine encountering the error message at ~1 AM – definitely not something you’d want to see on your screen at that hour. It reminds me of one of my all-time favorite Azure error messages:

Let’s break down the error message…

bla bla bla 'Offer with PublisherId: 'fortinet', OfferId: 'fortinet_fortigate-vm_v5' cannot be purchased due to validation errors. bla bla bla The 'unknown' payment instrument(s) is not supported for offer with OfferId: 'fortinet_fortigate-vm_v5', PlanId 'fortinet_fg-vm_payg_2023_g2'. Correlation Id '61ff72d3-3752-b363-d763-4374600b9f20'.' with azurerm_virtual_machine.fgtvm[0], on fgtvm.tf line 76 bla bla bla

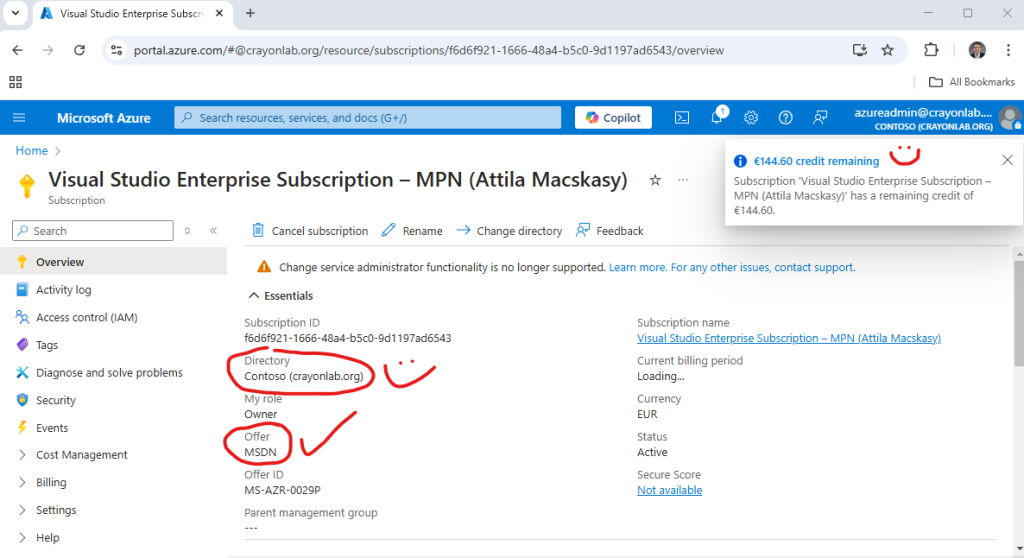

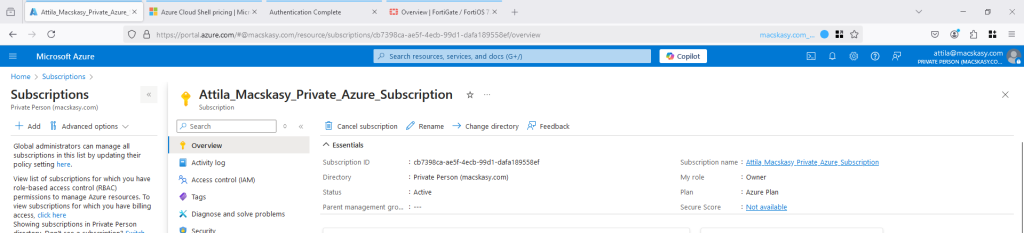

Hmm… While I’m accustomed to investing my own money in self-education, no matter the cost, this time I decided to leverage Microsoft and Crayon’s resources (well, money, haha). I used my amazing multi-cloud lab for this deployment.

With Visual Studio and MSDN at my fingertips, what more could you possibly need for limitless learning excitement? Well, perhaps a slight bending of the rules with M365/Contoso E5 users by leveraging Microsoft’s CDX demo tenant (despite those pesky restrictions about not using it for learning or education). Ah, the joys of navigating legal fine print…

But wait a minute – while I’m leveraging others’ resources, who’s paying the bill for this lovely PAYG Fortinet Marketplace item? Let’s not forget the error message: ‘unknown’ payment instrument(s).

Aha! No payment method – that’s the issue. Turns out, it’s my problem too, as I wasted time troubleshooting this unnecessary error. Time to switch over to my private production Azure account – this time, I’m footing the bill.

Don’t report to my wife!

Actually, she looks much better and nicer than DALL-E imagines.

Woohoo! The same deployment worked perfectly here without any error messages.

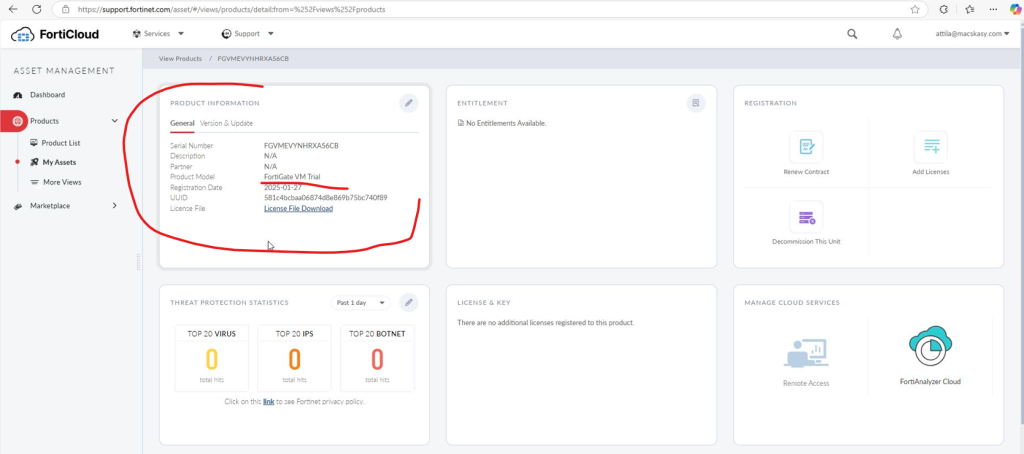

HINT #1: Assign licensing (Permanent trial mode)

Follow this: Permanent trial mode for FortiGate-VM | FortiGate / FortiOS 7.4.7 | Fortinet Document Library

HINT #2: Use unique deployment parameters (variables.tf)

Use unique parameters to avoid reserved names and DNS conflicts in deployment.

HINT #3: Where is the password?

I’m hoping to discover a new version where the adminpassword for FortiGate is securely injected as an environment variable, ideally retrieved from Azure Key Vault in an encrypted manner – just as it should be. This would allow the deployment to run smoothly as part of an Azure Landing Zone deployment, with FortiGate integrating into the Hub networking setup instead of relying on the Azure Virtual Network Gateway.
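Until such a version exists, something along these lines might get close. This is a sketch under assumptions, not the repository's code: the Key Vault name, resource group, and secret name are hypothetical placeholders, and only the variable name `adminpassword` comes from the deployment itself.

```hcl
# Hypothetical Key Vault and secret names; replace with your own.
data "azurerm_key_vault" "lab" {
  name                = "kv-example-lab"
  resource_group_name = "rg-example-shared"
}

data "azurerm_key_vault_secret" "fgt_admin" {
  name         = "fgt-adminpassword"
  key_vault_id = data.azurerm_key_vault.lab.id
}

# If the repository already declares this variable, add `sensitive = true`
# to the existing block instead of declaring it a second time.
variable "adminpassword" {
  type      = string
  sensitive = true
  default   = null
}

locals {
  # Prefer an explicitly supplied value (for example through the
  # TF_VAR_adminpassword environment variable); otherwise fall back
  # to the secret read from Key Vault.
  fgt_admin_password = coalesce(var.adminpassword, data.azurerm_key_vault_secret.fgt_admin.value)
}
```

Setting `TF_VAR_adminpassword` in the shell keeps the password out of variables.tf and the command line, while `sensitive = true` keeps it out of plan output. Either way the value still ends up in the Terraform state, so the state backend needs to be protected as well.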

HINT #4: Azure Marketplace Agreement and Terraform State Don’t Get Along

Keep in mind: This agreement only needs to be accepted once per subscription.

You need to accept the Azure Marketplace item as outlined in the documentation, either before or during deployment. This can be done using AZ CLI or Terraform. If you handle it in Terraform and redeploy, you’ll need to import it into the state or reference it as a data block. However, when you destroy the deployment in Terraform, the acceptance remains active, which can cause your next deployment attempt to fail. Not exactly a fun experience.
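The import route could be sketched with an import block, which needs Terraform 1.5 or later. I am assuming here that the configuration declares the agreement as `azurerm_marketplace_agreement.fortinet`; the subscription ID is a placeholder, and the ID layout follows the format I understand the provider documentation to use, so verify it against the registry page before relying on it.

```hcl
# Brings an agreement that was already accepted (for example with the CLI)
# under Terraform management, so the next apply does not try to create it again.
import {
  # Assumed resource address; it must match a resource block in your configuration.
  to = azurerm_marketplace_agreement.fortinet

  # Placeholder subscription ID; publisher, offer, and plan as used in this article.
  id = "/subscriptions/00000000-0000-0000-0000-000000000000/providers/Microsoft.MarketplaceOrdering/agreements/fortinet/offers/fortinet_fortigate-vm_v5/plans/fortinet_fg-vm_payg_2023"
}
```

After one successful apply the agreement is in the state and the import block can be deleted.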

azurerm_marketplace_agreement | Resources | hashicorp/azurerm | Terraform | Terraform Registry

or

BYOL:

    az vm image terms accept --publisher fortinet --offer fortinet_fortigate-vm_v5 --plan fortinet_fg-vm

PAYG:

    az vm image terms accept --publisher fortinet --offer fortinet_fortigate-vm_v5 --plan fortinet_fg-vm_payg_2023

AZ CLI worked for me:

The same thing in Terraform looks like this:

    resource "azurerm_marketplace_agreement" "fortinet" {
      publisher = "fortinet"
      offer = "fortinet_fortigate-vm_v5"
      plan = "fortinet_fg-vm_payg_2023"
    }

Summary and next steps

Now, let’s go back to the beginning and cover everything you need to know to successfully complete this deployment. This time, I’ll try an HA deployment (even though I don’t have a license yet).

In my next blog article, I’ll focus on a step-by-step deployment guide, with less emphasis on troubleshooting.