This blog explores multi-cloud networking challenges, highlighting a real-world Azure-to-AWS modernization project. It emphasizes the importance of next-generation firewall marketplace appliances like FortiGate for standardization, secure connectivity, and automation, while addressing tools like Terraform and Python for seamless multi-cloud integration.

Standardizing Networking in Multi-Cloud Architectures

As a multi-cloud architect, I’ve long been intrigued by the challenge of standardizing networking in multi-cloud environments. Each cloud provider has its own terminology and approach to managing virtual networks—VNet in Azure, VPC in AWS, and similar concepts in other platforms. Despite these differences, they all refer to the same foundational networking principles.

When designing multi-cloud solutions, the goal is to standardize as much as possible. This reduces the need to understand the unique configurations and nuances of each provider, streamlining management and deployment. For example, Kubernetes has gained immense popularity compared to services like Azure Functions or AWS Lambda. A major reason is its consistency across cloud platforms, which lets workloads move between providers and helps avoid vendor lock-in.

Networking plays a similarly pivotal role in cloud adoption frameworks across providers. It’s a fundamental component of landing zones, and standardization here is critical. The concept of hub-and-spoke networking, for instance, has been around for some time. It provides a flexible architecture where you can use either native cloud network gateways or third-party appliances from vendors such as Fortinet, Check Point, or Palo Alto Networks.

One key advantage of standardizing networking connectivity across clouds and on-premises environments is simpler management. This becomes especially valuable when security vendors offer unified management for both cloud and on-premises firewall appliances. Such solutions not only make networking management easier but also enhance security and operational efficiency.

In conclusion, standardizing networking across multi-cloud and on-premises environments is a strategic move. It aligns with the principles of flexibility, consistency, and simplicity, ensuring that your architecture can scale seamlessly while reducing complexity.

Comparison of basic networking services across clouds

The table below maps equivalent services across Azure, AWS, Google Cloud, Oracle Cloud, and Alibaba Cloud. These resources are essential for deploying virtual network gateways or third-party firewall appliances as virtual machines.

| Resource Type | Azure | AWS | Google Cloud | Oracle Cloud | Alibaba Cloud |
|---|---|---|---|---|---|
| Virtual Network | Virtual Network | Virtual Private Cloud (VPC) | Virtual Private Cloud (VPC) | Virtual Cloud Network (VCN) | Virtual Private Cloud (VPC) |
| Route Table | Route Table | Route Table | Routes | Route Table | Route Table |
| Virtual Machine | Virtual Machine | EC2 (Elastic Compute Cloud) | Compute Engine | Compute Instance | Elastic Compute Service (ECS) |
| Network Interface | Network Interface | Elastic Network Interface (ENI) | Network Interface | Virtual Network Interface Card (VNIC) | Elastic Network Interface (ENI) |
| Network Security Group | Network Security Group | Security Group | Firewall Rules | Network Security Group | Security Group |
| Public IP Address | Public IP Address | Elastic IP (EIP) | External IP | Public IP Address | Elastic IP Address (EIP) |
| Virtual Network Gateway | Virtual Network Gateway | Virtual Private Gateway | Cloud VPN | Dynamic Routing Gateway (DRG) | VPN Gateway |

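Naming differs in places. Alibaba Cloud calls its subnets “vSwitches,” and GCP has no standalone Internet Gateway resource: internet-bound traffic uses a route whose next hop is the default internet gateway, while outbound NAT for instances without external IP addresses is provided by Cloud NAT. Here’s a comparison of some additional networking features across providers.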

| Networking Feature | Azure | AWS | Google Cloud | Oracle Cloud | Alibaba Cloud |
|---|---|---|---|---|---|
| Private Connectivity | ExpressRoute | Direct Connect | Cloud Interconnect/Cloud VPN | FastConnect | Express Connect |
| Load Balancer | Azure Load Balancer | Elastic Load Balancer (ELB) | Cloud Load Balancing | Load Balancer | SLB (Server Load Balancer) |
| DNS Service | Azure DNS | Route 53 | Cloud DNS | DNS Zone Management | Alibaba Cloud DNS |
| Firewall | Azure Firewall | AWS Network Firewall | VPC Firewall Rules | Network Firewall | Cloud Firewall |
| Content Delivery Network | Azure CDN | Amazon CloudFront | Cloud CDN | Oracle Cloud CDN | Alibaba Cloud CDN |
| Peering | VNet Peering | VPC Peering | VPC Network Peering | Local Peering Gateway | VPC Peering |
| DDoS Protection | Azure DDoS Protection | AWS Shield | Cloud Armor | DDoS Protection | Anti-DDoS Basic/Pro |
| Security Groups | Network Security Groups | Security Groups | Firewall Rules | Security List | Security Groups |
| Traffic Management | Traffic Manager | Route 53 Traffic Policies | Traffic Director | Traffic Steering Policies | Global Traffic Manager |
| Global Network Hub | Azure Virtual WAN | AWS Cloud WAN (or AWS Transit Gateway) | Network Connectivity Center | Dynamic Routing Gateway | Cloud Enterprise Network |

Which resources are most similar across clouds (Public IP, Route Table, Virtual Network, and VM)?

Whether you call it a VPC, VNet, or VCN, you’re essentially referring to the same concept: a virtual network. These networks are defined by address spaces that are typically subdivided into smaller subnets, including a gateway subnet. Every provider reserves a few addresses in each subnet for its own infrastructure services, such as the default gateway and DNS. In Azure and AWS, for example, .1, .2, and .3 are reserved, so the first assignable address is .4.

In Azure, within each subnet, the first four IP addresses and the last IP address are reserved, totaling five reserved addresses per subnet. For example, in a subnet with the address range 192.168.1.0/24, the reserved addresses are:

- 192.168.1.0: Network address

- 192.168.1.1: Reserved by Azure for the default gateway

- 192.168.1.2 and 192.168.1.3: Reserved by Azure to map Azure DNS IP addresses to the virtual network space

- 192.168.1.255: Network broadcast address

These reservations are standard across Azure subnets to ensure proper network functionality and management.
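When planning address space, Python’s standard `ipaddress` module can compute these values for any Azure subnet. The sketch below simply encodes the five-address rule listed above; the function name is hypothetical, and other providers use different rules (AWS also reserves the first four addresses and the last one, while GCP reserves four per subnet), so don’t reuse it unchanged for other clouds.

```python
import ipaddress

AZURE_RESERVED_COUNT = 5  # first four addresses plus the last one


def azure_subnet_summary(cidr: str) -> dict:
    """Summarize the reserved and assignable IPv4 addresses of an Azure subnet."""
    net = ipaddress.IPv4Network(cidr)
    if net.prefixlen > 29:
        # Azure's smallest supported IPv4 subnet is a /29
        raise ValueError(f"{cidr}: Azure subnets must be /29 or larger")
    base = net.network_address
    return {
        "network": str(base),
        "default_gateway": str(base + 1),
        "azure_dns": [str(base + 2), str(base + 3)],
        "broadcast": str(net.broadcast_address),
        "first_assignable": str(base + 4),
        "last_assignable": str(net.broadcast_address - 1),
        "assignable_count": net.num_addresses - AZURE_RESERVED_COUNT,
    }


if __name__ == "__main__":
    for key, value in azure_subnet_summary("192.168.1.0/24").items():
        print(f"{key}: {value}")
```

For 192.168.1.0/24 this reproduces the list above and leaves 251 assignable addresses, from 192.168.1.4 to 192.168.1.254.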

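Public clouds rely heavily on DNS names, rather than hard-coded IP addresses, to identify resources. Address assignment is handled by the platform: VMs obtain their IP configuration from the provider’s built-in DHCP service, so you rarely run DHCP servers of your own in the cloud.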

A public IP address is a public IP address in every cloud; what differs is the range each provider draws from.

Public IPv4 addresses are globally unique and allocated through the regional internet registries, so the ranges owned by different providers never overlap. Each provider publishes the ranges it uses, usually broken down by region and service, which makes it possible to tell which provider, and often which region, a given public (WAN) IP address belongs to. Below are the sources for several leading cloud providers:

| Cloud Provider | Source |
|---|---|
| Amazon Web Services (AWS) | AWS IP Ranges |
| Microsoft Azure | Azure IP Ranges |
| Google Cloud Platform (GCP) | |
| Oracle Cloud | Oracle Cloud IP Ranges |
| Alibaba Cloud | |
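These lists are machine-readable. AWS, for example, serves its ranges as JSON at `https://ip-ranges.amazonaws.com/ip-ranges.json`, with a `prefixes` list whose entries carry `ip_prefix`, `region`, and `service` fields. The sketch below assumes that format and finds every AWS prefix that contains a given address. To keep it runnable offline it uses a small inline sample built from RFC 5737 documentation ranges, so the prefixes are placeholders rather than real AWS data, and the `lookup` helper is hypothetical.

```python
import ipaddress
import json

# Placeholder data in the shape of AWS's ip-ranges.json.
# The prefixes are RFC 5737 documentation ranges, NOT real AWS allocations.
SAMPLE_RANGES = json.loads("""
{
  "syncToken": "0",
  "createDate": "2024-01-01-00-00-00",
  "prefixes": [
    {"ip_prefix": "198.51.100.0/24", "region": "eu-west-1",
     "service": "EC2", "network_border_group": "eu-west-1"},
    {"ip_prefix": "203.0.113.0/25", "region": "us-east-1",
     "service": "AMAZON", "network_border_group": "us-east-1"}
  ]
}
""")


def lookup(ip: str, ranges: dict) -> list:
    """Return (prefix, region, service) for every IPv4 prefix that contains ip."""
    addr = ipaddress.ip_address(ip)
    matches = []
    for entry in ranges.get("prefixes", []):
        if addr in ipaddress.ip_network(entry["ip_prefix"]):
            matches.append((entry["ip_prefix"], entry["region"], entry["service"]))
    return matches


if __name__ == "__main__":
    print(lookup("198.51.100.42", SAMPLE_RANGES))
    # prints [('198.51.100.0/24', 'eu-west-1', 'EC2')]
```

Against the real file (loaded, for instance, with `json.load(urllib.request.urlopen(url))`), one address often matches several entries, because AWS lists broad `AMAZON` prefixes alongside service-specific ones. That is why the helper returns every match instead of stopping at the first.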

Route tables function consistently across cloud platforms, offering a familiar and straightforward way to configure routing. Likewise, a virtual machine in the cloud operates on the same fundamental principles as on-premises virtualization platforms like VMware or Hyper-V. At its core, it’s still about CPU, memory, and disk resources, regardless of the cloud provider.
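One behavior that carries over directly is route selection: Azure, AWS, and GCP all choose the most specific matching route (longest-prefix match) when several routes cover a destination. Here is a minimal Python sketch using a hypothetical spoke route table with a local route, an on-premises range behind a VPN gateway, and a default route to the internet. The target names are placeholders rather than real resource IDs, and real platforms add tie-breaking rules (for example, between static and propagated routes) that are not modeled here.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Route:
    destination: str  # CIDR block, e.g. "10.0.0.0/16"
    target: str       # next-hop label, e.g. "local" or "vpn-gateway"


def select_route(dest_ip: str, table: List[Route]) -> Optional[Route]:
    """Return the most specific route whose destination contains dest_ip."""
    addr = ipaddress.ip_address(dest_ip)
    best: Optional[Route] = None
    best_len = -1
    for route in table:
        net = ipaddress.ip_network(route.destination)
        if addr in net and net.prefixlen > best_len:
            best, best_len = route, net.prefixlen
    return best


# Hypothetical route table for a spoke network
TABLE = [
    Route("10.0.0.0/16", "local"),
    Route("192.168.0.0/16", "vpn-gateway"),
    Route("0.0.0.0/0", "internet-gateway"),
]

if __name__ == "__main__":
    for ip in ("10.0.3.7", "192.168.1.20", "8.8.8.8"):
        print(ip, "->", select_route(ip, TABLE).target)
```

The default route matches everything, but it only wins when nothing more specific does, which is exactly how the VNet or VPC local route and a VPN route coexist with a 0.0.0.0/0 entry.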


In essence, there’s no mystery involved. The foundational principles of networking are implemented similarly across all cloud platforms. Mastering these fundamentals simplifies working across multiple clouds, enabling seamless integration and management.

Comparing Virtual Network Gateways: Understanding Multi-Cloud Differences in Site-to-Site VPN Configurations

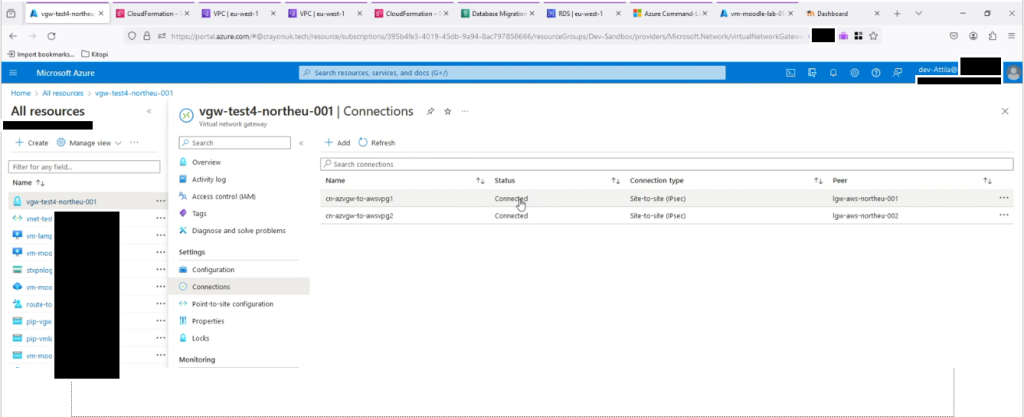

Managing a Virtual Network Gateway differs significantly across Azure, AWS, and GCP because each provider designs the service and its configuration workflow differently. Setting up a site-to-site VPN connection illustrates this:

- Azure uses a Virtual Network Gateway. You choose the VPN type (route-based or policy-based), deploy the gateway into a VNet through a dedicated subnet that must be named GatewaySubnet, describe the on-premises side with a Local Network Gateway, and join the two with a Connection resource.

- AWS combines a Customer Gateway, which holds the on-premises device details, with a Virtual Private Gateway attached to the VPC. You then create the VPN connection between them and manage routes through the route table associated with the VPC.

- GCP uses Cloud VPN. You create a VPN gateway and define the tunnel parameters directly, including shared secrets and on-premises IP ranges, but routing is a separate step: static routes, or dynamic routing through a Cloud Router running BGP.

These differences show how each provider abstracts complexity: Azure emphasizes detailed configuration, AWS splits responsibilities between components, and GCP favors streamlined workflows for rapid deployment. Understanding these nuances is essential for managing multi-cloud networking effectively.
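To make the AWS split concrete, here is a minimal boto3 sketch of the AWS side of a static-routed site-to-site VPN towards Azure. It assumes you already know the Azure VPN gateway’s public IP, the VPC ID, the route table ID, and the Azure address range; all IDs, addresses, the ASN, and the `create_aws_side_vpn` helper are hypothetical. A production setup would also add tags, wait for each resource to become available, and possibly prefer BGP. The EC2 client is passed in, so the function can be exercised with a stub instead of live AWS calls.

```python
def create_aws_side_vpn(ec2, azure_gateway_ip, vpc_id, route_table_id, azure_cidr):
    """Create the AWS components of a static site-to-site VPN and return their IDs.

    ec2 is a boto3 EC2 client (or any object exposing the same methods).
    """
    # 1. Customer gateway: AWS's representation of the remote (Azure) VPN endpoint.
    #    65000 is a private ASN; the tunnel below still uses static routing.
    cgw = ec2.create_customer_gateway(
        BgpAsn=65000, PublicIp=azure_gateway_ip, Type="ipsec.1"
    )["CustomerGateway"]["CustomerGatewayId"]

    # 2. Virtual private gateway, attached to the VPC.
    #    In practice you may need to wait until it is 'available' before attaching.
    vgw = ec2.create_vpn_gateway(Type="ipsec.1")["VpnGateway"]["VpnGatewayId"]
    ec2.attach_vpn_gateway(VpcId=vpc_id, VpnGatewayId=vgw)

    # 3. VPN connection between the two gateways, with a static route to Azure.
    vpn = ec2.create_vpn_connection(
        CustomerGatewayId=cgw,
        VpnGatewayId=vgw,
        Type="ipsec.1",
        Options={"StaticRoutesOnly": True},
    )["VpnConnection"]["VpnConnectionId"]
    ec2.create_vpn_connection_route(DestinationCidrBlock=azure_cidr, VpnConnectionId=vpn)

    # 4. Propagate the VPN routes into the VPC route table.
    ec2.enable_vgw_route_propagation(GatewayId=vgw, RouteTableId=route_table_id)
    return {"customer_gateway": cgw, "vpn_gateway": vgw, "vpn_connection": vpn}


if __name__ == "__main__":
    import boto3  # needs AWS credentials; the IDs below are placeholders

    client = boto3.client("ec2", region_name="eu-west-1")
    print(create_aws_side_vpn(client, "203.0.113.10", "vpc-0123456789abcdef0",
                              "rtb-0123456789abcdef0", "10.1.0.0/16"))
```

The Azure side still needs its own Local Network Gateway and Connection pointing back at the AWS tunnel endpoints, which is where the two providers’ component models have to be lined up by hand.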

My Real Project Experience Implementing Multi-Cloud Networking: Azure to AWS Migration

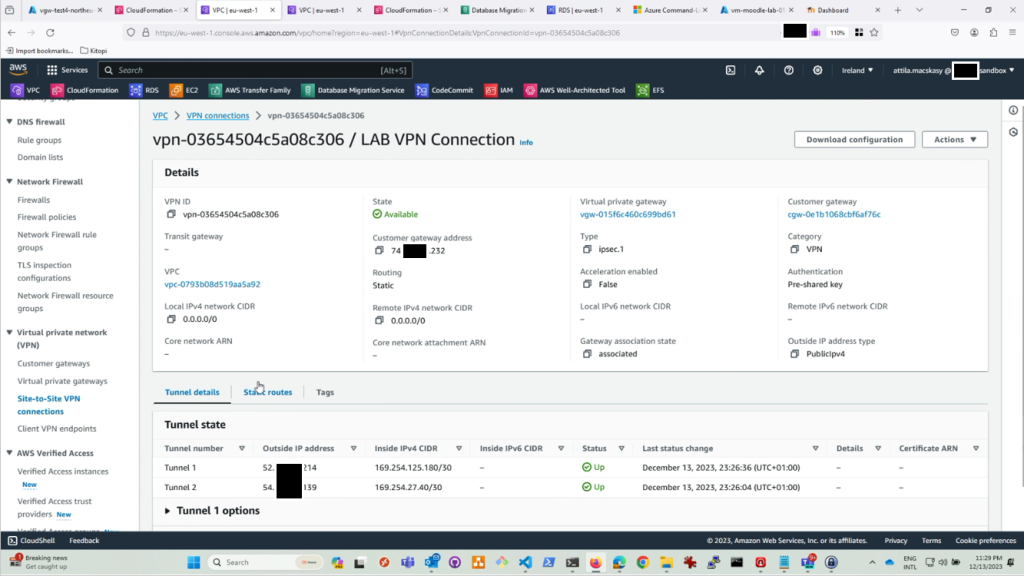

Last year, I worked on an exciting project with my friend Hugo Rodrigues from Portugal, modernizing a Moodle application (a PHP application that, like WordPress, runs on a LAMP stack) hosted on a large Azure VM running Ubuntu. The goal was to migrate this setup to AWS cloud-native services.

In the new architecture, the Ubuntu VM’s Linux file system was replaced with Amazon Elastic File System (EFS), and we used AWS Transfer Family to migrate the files over SSH (SFTP) with the lftp mirror command. For the LAMP stack’s MySQL database, we used AWS Database Migration Service (DMS) to migrate the data to Amazon Aurora. It was a fun and rewarding experience. A big shoutout to Hugo (he is amazing), from whom I learned so much, especially when I took on the responsibility of managing the automation and implementation on my own.

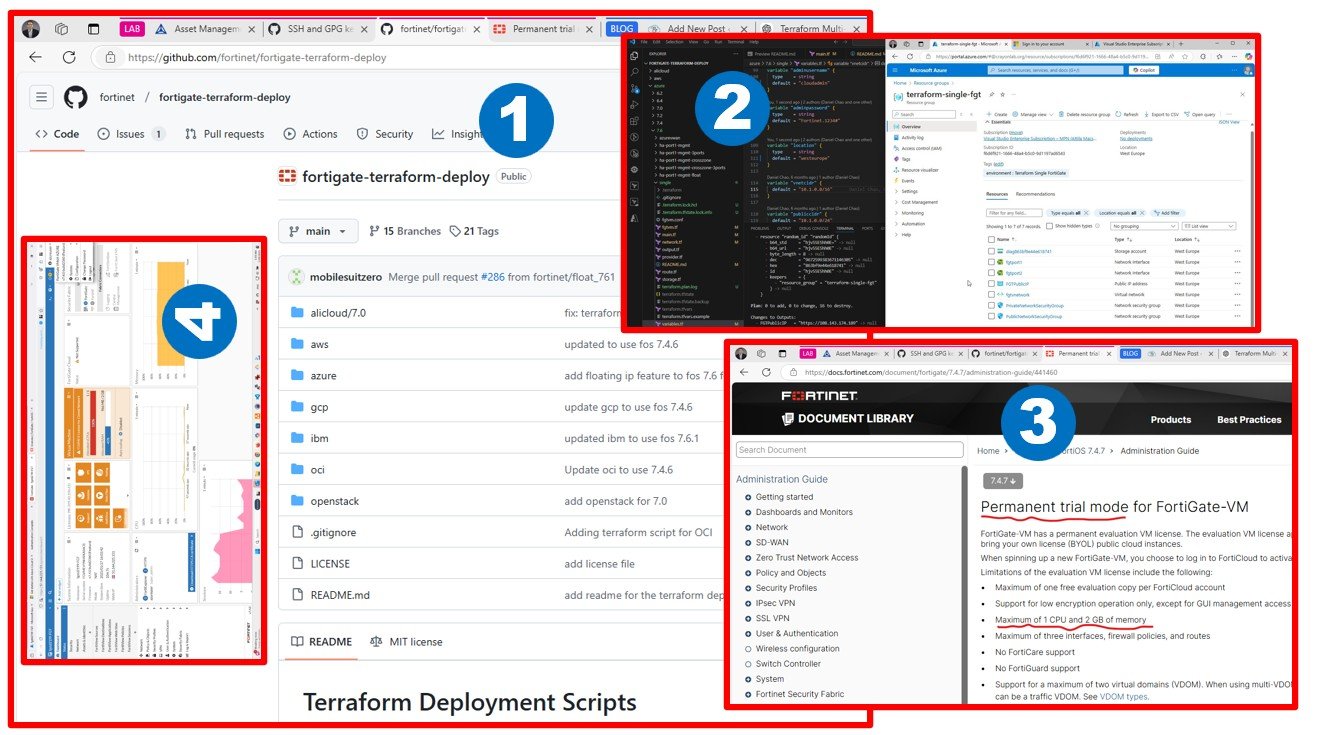

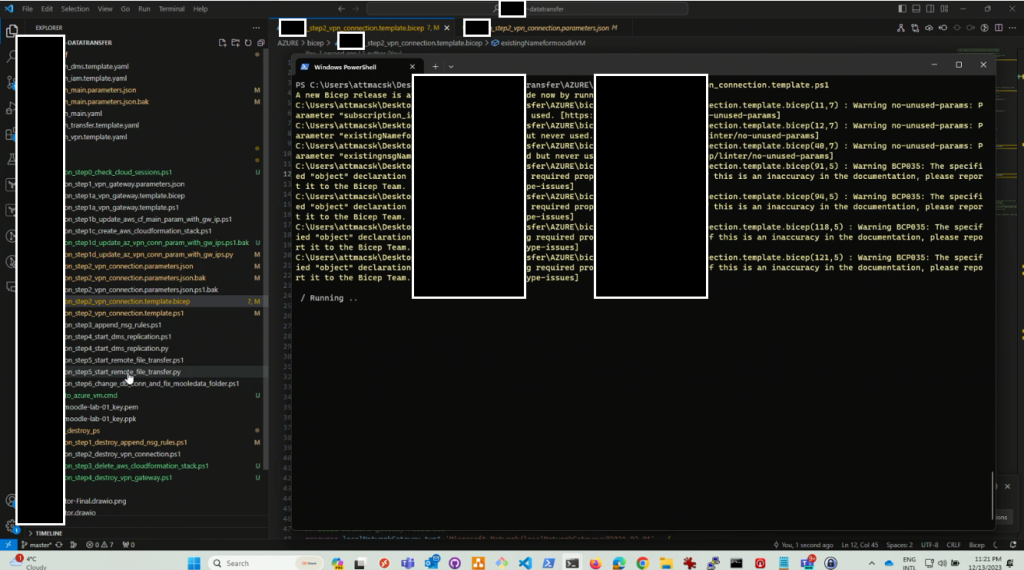

A critical part of the migration process was establishing site-to-site networking connectivity between Azure and AWS, which enabled seamless data transfer for both files and databases. While we initially intended to use Terraform providers for the entire implementation, things became more complex due to differing preferences: the Azure team encouraged me to use Bicep, while the AWS team pushed Hugo to use CloudFormation. Despite these challenges, we implemented the solution successfully. Still, the experience taught me an important lesson: in multi-cloud environments, there is usually a simpler and more efficient way to get things done.

This is the AWS console view showing the established VPN connection between Azure and AWS, serving as the core foundation for this migration and modernization project.

Running DevOps pipelines that combine Azure Bicep and AWS CloudFormation can be difficult because their input and output parameters are not standardized. Even simple tasks, like retrieving the public IP address of an Azure Virtual Network Gateway, turned out to be a bit tricky. On the AWS side, I really enjoyed using boto3 in Python: it made the process easier and was especially fun during the early days of ChatGPT-assisted code generation. PowerShell did not work well for me when editing YAML and JSON (writing arrays, for example), while Python just worked, thanks to the abundance of examples and AI-generated code.
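A small Python translation layer is one way to bridge that gap. The sketch below assumes the Azure side runs `az deployment group show ... --query properties.outputs`, which returns each Bicep output as an object with `type` and `value` keys, and converts the result into the `[{"ParameterKey": ..., "ParameterValue": ...}]` list that `aws cloudformation create-stack --parameters file://...` accepts. Arrays become comma-separated strings, the form CloudFormation expects for `CommaDelimitedList` parameters. The output and parameter names (`vpnGatewayPublicIp`, `AzureVpnGatewayIp`, and so on) are hypothetical.

```python
import json

# Hypothetical mapping from Bicep output names to CloudFormation parameter names
OUTPUT_TO_PARAMETER = {
    "vpnGatewayPublicIp": "AzureVpnGatewayIp",
    "vnetAddressPrefixes": "AzureAddressPrefixes",
}


def arm_outputs_to_cfn_parameters(arm_outputs: dict, mapping: dict) -> list:
    """Convert ARM/Bicep deployment outputs into a CloudFormation parameters list."""
    params = []
    for output_name, param_key in mapping.items():
        value = arm_outputs[output_name]["value"]  # each output is {"type": ..., "value": ...}
        if isinstance(value, list):
            value = ",".join(str(v) for v in value)  # CommaDelimitedList format
        params.append({"ParameterKey": param_key, "ParameterValue": str(value)})
    return params


if __name__ == "__main__":
    # Example payload shaped like `az deployment group show --query properties.outputs`
    outputs = json.loads("""
    {
      "vpnGatewayPublicIp": {"type": "String", "value": "203.0.113.10"},
      "vnetAddressPrefixes": {"type": "Array", "value": ["10.1.0.0/16", "10.2.0.0/16"]}
    }
    """)
    print(json.dumps(arm_outputs_to_cfn_parameters(outputs, OUTPUT_TO_PARAMETER), indent=2))
```

Redirecting the printed JSON to a file gives a parameters file for the CloudFormation stack. Because `terraform output -json` also wraps each value in an object with a `value` key, the same function should work on Terraform outputs unchanged.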

This experience highlighted why Terraform is often a better choice. Its variables and outputs work the same way regardless of provider, and the azurerm and aws providers can be used in the same configuration, so a value such as the Azure gateway’s public IP can flow directly into the AWS resources that need it. That simplifies tasks that otherwise feel unnecessarily complex.

When choosing a gateway or firewall solution, always ensure you have deployment scripts for both the gateway setup and the in-guest configuration of the gateway VM. Both aspects are equally important for a seamless implementation.
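For the in-guest side, one lightweight option is to render the appliance configuration from the same variables the infrastructure scripts use. Below is a minimal Python sketch that emits a FortiOS CLI snippet for a route-based IPsec tunnel (phase 1 and phase 2 interfaces plus a static route). It assumes IKEv2 with a pre-shared key; the tunnel name, WAN port, proposals, DH group, and addresses are placeholders, and the firewall policies FortiOS also needs before traffic flows are omitted. Check the commands against the FortiOS CLI reference for your firmware version before pushing them.

```python
import ipaddress


def _subnet(cidr: str) -> str:
    """Format a CIDR as 'address netmask', e.g. '10.1.0.0 255.255.0.0'."""
    net = ipaddress.IPv4Network(cidr)
    return f"{net.network_address} {net.netmask}"


def render_fortios_ipsec(name, wan_port, remote_gw, psk, local_cidr, remote_cidr):
    """Render a FortiOS CLI snippet for a route-based IPsec VPN (no firewall policies).

    Keep the pre-shared key out of source control; inject it at deploy time.
    """
    return "\n".join([
        "config vpn ipsec phase1-interface",
        f'    edit "{name}"',
        f'        set interface "{wan_port}"',
        "        set ike-version 2",
        "        set peertype any",
        "        set net-device disable",
        "        set proposal aes256-sha256",
        "        set dhgrp 14",
        f"        set remote-gw {remote_gw}",
        f'        set psksecret "{psk}"',
        "    next",
        "end",
        "config vpn ipsec phase2-interface",
        f'    edit "{name}"',
        f'        set phase1name "{name}"',
        "        set proposal aes256-sha256",
        "        set dhgrp 14",
        f"        set src-subnet {_subnet(local_cidr)}",
        f"        set dst-subnet {_subnet(remote_cidr)}",
        "    next",
        "end",
        "config router static",
        "    edit 0",
        f"        set dst {_subnet(remote_cidr)}",
        f'        set device "{name}"',
        "    next",
        "end",
    ])


if __name__ == "__main__":
    print(render_fortios_ipsec("to-aws", "port1", "203.0.113.10", "change-me",
                               "10.1.0.0/16", "10.2.0.0/16"))
```

Generating text keeps the example dependency-free; the FortiOS Terraform provider is the more declarative way to manage the same settings.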

Terraform: FortiOS as a provider (FortiGate/FortiOS 6.2.16, Fortinet Document Library)

I really enjoy using MikroTik, but scaling Cloud Hosted Router (CHR) deployment and configuration has been quite challenging, especially since community support is limited to a best-effort basis.

MikroTik’s “CHR on Azure” guide inspired me to build attilamacskasy/terraform-azurerm-mikrotikchr, a Terraform configuration that deploys a MikroTik Cloud Hosted Router (CHR) on Microsoft Azure. It includes all the necessary Azure resources, such as storage accounts, virtual machines, and networking components.

terraform-routeros/routeros provider: Docs overview (Terraform Registry)

Standardizing Multi-Cloud Networking with FortiGate: Simplified Management and Enhanced Security

Consider deploying a FortiGate marketplace VM in each cloud to standardize your networking setup. This approach simplifies the management of site-to-site VPNs by providing a consistent interface across Azure, AWS, and GCP, and it offers additional benefits: FortiGate appliances deliver advanced security features such as intrusion prevention, deep packet inspection, and centralized firewall management.

By deploying FortiGate VMs in each cloud, you can establish a unified security posture and gain better visibility into traffic patterns across your multi-cloud environment. These appliances also support integration with cloud-native services like AWS Transit Gateway, Azure Virtual WAN, and GCP’s Network Connectivity Center, allowing you to build scalable and secure architectures. Furthermore, the FortiManager platform enables centralized configuration and policy enforcement across on-premises and cloud deployments, streamlining operations and reducing administrative overhead.
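Because every FortiGate exposes the same REST API, cross-cloud checks are easy to script even before centralized management is in place. The sketch below polls one FortiGate per cloud and reports whether each IPsec tunnel is up. It assumes the FortiOS monitor endpoint `/api/v2/monitor/vpn/ipsec`, a REST API administrator token sent as a bearer token, and a response with a `results` list whose entries have a `name` and a `proxyid` list of per-selector `status` values; treat that schema as an assumption to confirm in the FortiOS REST API reference for your version. The hostnames and environment variable names are hypothetical.

```python
import json
import os
import ssl
import urllib.request

# Hypothetical inventory: cloud -> (FortiGate base URL, env var holding its API token)
FORTIGATES = {
    "azure": ("https://fgt-azure.example.com", "FGT_AZURE_TOKEN"),
    "aws": ("https://fgt-aws.example.com", "FGT_AWS_TOKEN"),
}


def fetch_ipsec_status(base_url: str, api_token: str) -> dict:
    """GET the IPsec monitor endpoint (assumed path: /api/v2/monitor/vpn/ipsec)."""
    req = urllib.request.Request(
        f"{base_url}/api/v2/monitor/vpn/ipsec",
        headers={"Authorization": f"Bearer {api_token}"},
    )
    # Certificate verification stays on; point it at your CA if the VMs use private certs.
    ctx = ssl.create_default_context()
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)


def summarize_tunnels(payload: dict) -> dict:
    """Map tunnel name -> True only if every phase 2 selector reports 'up' (assumed schema)."""
    summary = {}
    for tunnel in payload.get("results", []):
        selectors = tunnel.get("proxyid", [])
        summary[tunnel["name"]] = bool(selectors) and all(
            s.get("status") == "up" for s in selectors
        )
    return summary


if __name__ == "__main__":
    for cloud, (url, token_var) in FORTIGATES.items():
        status = summarize_tunnels(fetch_ipsec_status(url, os.environ[token_var]))
        for tunnel, up in status.items():
            print(f"{cloud:6} {tunnel:20} {'up' if up else 'DOWN'}")
```

Keeping the parsing separate from the HTTP call means the summary logic can be tested without a live appliance, and the same loop works unchanged for every cloud in the inventory.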

This standardized approach not only mitigates the complexity of managing disparate native solutions but also enhances security, performance, and operational efficiency in a multi-cloud scenario.